ENN543 Data Analytics and Optimisation Semester 2, 2019

Assignment

This document sets out the three (3) questions you are to complete for this assessment. The assignment is worth 30% of the overall subject grade. Weights for individual questions are indicated throughout the document. Students are to work in small groups (2-3 students) and submit their answers in a single, separate document (either a PDF or Word document), uploaded to TurnItIn.

The submitted answers should be written in the style of a research report, and outline the methods investigated (and the rationale behind selecting them), how the evaluation was structured (i.e. training and testing splits, etc.), the results obtained and the conclusions.

One submission should be made per group.

Further Instructions:

- Data required for this assessment is available on Blackboard in ENN543 Assessment 2 Data.zip, alongside this document.

- As part of the submission, students should provide a brief table of contributions to outline the contribution of each group member. This table should be signed by all group members to signal agreement. Note that all group members will be assigned the same mark unless one or more members of the group explicitly request that marks be moderated based on contributions.

- MATLAB code or scripts (or equivalent materials for other languages) may be submitted as supplementary material or appendices; however, note that these will not be directly marked, and will only be consulted if there are ambiguities.

- Figures and outputs/results that are critical to the answer should be included in the main response.

- Students who require an extension should lodge their extension application with HiQ (see http://external-apps.qut.edu.au/studentservices/concession/). Please note that teaching staff (including the unit coordinator) cannot grant extensions.

Problem 1. Subject Invariant Activity Recognition (30%). A common problem when performing classification and/or recognition on human behaviours, for example speech or activity recognition, is that different people will perform the same behaviour in different ways. In the case of speech this may manifest as different accents, while in the case of activity recognition differences between subjects may be driven by differences in how they move their body to perform the target action. To cope with such variations, models are typically trained on many subjects in the hope that they can capture the different possible manners in which the target behaviours are performed, such that the model can generalise to unseen subjects.

The Task

You are to investigate how feasible it is to use a single chest mounted accelerometer to recognise the type of activity being performed by a person. You have been provided with data for 15 subjects (see 1.csv, 2.csv, 3.csv, ... 15.csv in the Q1 directory of the assignment data archive). Each subject performs seven activities:

- Working at Computer

- Standing Up, Walking and Going up/down stairs

- Standing

- Walking

- Going Up/Down Stairs

- Walking and Talking with Someone

- Talking while Standing

The data consists of five columns which are (in order):

- A sequential index, which should be ignored

- X acceleration

- Y acceleration

- Z acceleration

- Activity type, which is your model target
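As a minimal sketch of reading one subject file with the column layout above: it assumes the files are comma separated with no header row (both are assumptions to check against the supplied data), and the function name `parse_subject_csv` and the sample values are purely illustrative.

```python
import csv
import io


def parse_subject_csv(text):
    """Split one subject's CSV text into accelerometer samples and activity labels.

    Assumes five comma-separated columns with no header row:
    index, x, y, z, activity. The index column is discarded.
    """
    samples, labels = [], []
    for row in csv.reader(io.StringIO(text)):
        if len(row) < 5:
            continue  # skip blank or malformed lines
        _, x, y, z, activity = row[:5]
        samples.append((float(x), float(y), float(z)))
        labels.append(int(float(activity)))
    return samples, labels


# Made-up rows, only to show the expected shape of the input.
example = "0,1502,2215,2153,1\n1,1667,2072,2047,1\n2,1611,1957,1906,2\n"
samples, labels = parse_subject_csv(example)
```

For a real file, the text could be read with `open(path).read()` and passed to the same function.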

Using this data, you are to develop a model to recognise the activities of an unseen subject, i.e. having trained the model on a selection of subjects, consider how well the model recognises the activities of a subject who has been held out of the training set.

In addressing this problem you are free to select your own approach (from those covered in lectures and tutorials) to recognise the actions. Your answer should explain the method you have chosen to use, and provide justification for your choice. You may optionally wish to include small scale experiments to support your decision. The training and testing splits used should be documented, and you should ensure that you evaluate your proposed approach on multiple subjects. Your answer should provide an analysis and discussion of your model's performance, should identify situations where the model fails (and if possible reasons for this failure), and should determine if performance is consistent across all subjects.
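One possible way to evaluate on multiple held-out subjects is leave-one-subject-out cross-validation, sketched below. This is an illustration rather than a required protocol: the function names are hypothetical, and model training is deliberately omitted.

```python
def leave_one_subject_out(subject_ids):
    """Yield (train_subjects, held_out_subject) pairs, holding out each subject once."""
    subjects = sorted(set(subject_ids))
    for held_out in subjects:
        train = [s for s in subjects if s != held_out]
        yield train, held_out


def accuracy(predicted, actual):
    """Fraction of predictions that match the true labels."""
    if not actual:
        raise ValueError("no labels to score")
    correct = sum(p == a for p, a in zip(predicted, actual))
    return correct / len(actual)


# Fifteen subjects, numbered as in the file names 1.csv ... 15.csv.
folds = list(leave_one_subject_out(range(1, 16)))
```

Reporting `accuracy` separately for each held-out subject, rather than only the average, makes it easier to see whether performance is consistent across subjects.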

Problem 2. Recognising a Person’s Age from Their Face (30%). Age estimation is a widely studied task relating to facial recognition, with applications in domains such as biometrics and human-computer interaction. Estimating age from facial images suffers from many of the same challenges as face recognition, such as variations in appearance caused by pose, lighting, and facial accessories (e.g. glasses) or facial hair. Much like facial recognition, a critical pre-processing step for age estimation is to localise and align the face, such that all examples are as consistent as possible in terms of the location of major landmarks such as the eyes and nose.

The Task

You are to develop a method to estimate the age of a subject from a facial image. The file UTKFace.zip in the Q2 directory within the data archive contains the aligned and cropped face images from the UTKFace dataset. This archive contains 20,000+ colour face images, all of which have been cropped and aligned in preparation for further processing. A selection of example raw images (i.e. uncropped images) are shown in Figure 1.

Figure 1: Example raw images from UTKFace. Note that the supplied cropped and aligned images contain only the face regions.

Faces in the archive are named as follows: [age]_[gender]_[race]_[datestamp].jpg, where:

- [age]: an integer from 0 to 116, indicating the age;

- [gender]: either 0 (male) or 1 (female);

- [race]: an integer from 0 to 4, denoting White, Black, Asian, Indian, and Others (like Hispanic, Latino, Middle Eastern);

- [datestamp]: date and time stamp, in the format of yyyymmddHHMMSSFFF, corresponding to the date and time an image was collected to UTKFace.

The [age] value is to be the primary response of your model. You may use or ignore the other variables as you choose.
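The labels can be recovered from the file names along the following lines. This is a hedged sketch: it assumes the fields are separated by underscores (check this against the files in the archive), the function name `parse_utkface_name` is hypothetical, and the example file name is made up.

```python
import os


def parse_utkface_name(path):
    """Return (age, gender, race) parsed from a UTKFace-style file name, or None.

    Assumes the first three underscore-separated fields are integers and
    that a fourth field (the datestamp) follows them.
    """
    fields = os.path.basename(path).split("_")
    if len(fields) < 4:
        return None  # a field is missing, so the labels cannot be trusted
    try:
        age, gender, race = (int(f) for f in fields[:3])
    except ValueError:
        return None  # a non-numeric field where a label was expected
    return age, gender, race


# Illustrative file name only.
label = parse_utkface_name("25_0_1_20170116002835308.jpg")
```

Returning `None` for malformed names lets such files be counted and excluded explicitly, which is worth documenting alongside the training and testing splits.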

In addressing this problem you are free to select your own approach (from those covered in lectures and tutorials) to determine age. Given the large size of the database, you are welcome to down-sample the images to a lower resolution, though be aware that if you are too aggressive in your down-sampling you may lose the ability to estimate age.
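A simple way to down-sample is to average non-overlapping pixel blocks, as sketched below. This assumes the image is already loaded as a NumPy array (height × width, optionally with a trailing colour axis); the function name `block_downsample` is hypothetical, and other resizing methods are equally valid.

```python
import numpy as np


def block_downsample(image, factor):
    """Shrink an image by averaging non-overlapping factor x factor pixel blocks.

    Rows and columns that do not fill a complete block are cropped off.
    """
    image = np.asarray(image, dtype=np.float64)
    height, width = image.shape[:2]
    h2, w2 = height - height % factor, width - width % factor
    cropped = image[:h2, :w2]
    # Split each spatial axis into (block index, offset within block).
    new_shape = (h2 // factor, factor, w2 // factor, factor) + image.shape[2:]
    return cropped.reshape(new_shape).mean(axis=(1, 3))


small = block_downsample(np.arange(16).reshape(4, 4), 2)
```

Comparing results at two or three values of `factor` is one way to check that the down-sampling has not removed the detail needed to estimate age.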

Your answer should explain the method you have chosen to use, and provide justification for your choice. You may optionally wish to include small scale experiments to support your decision. Any approaches used to modify the data should be documented, as should the training and testing splits. Your answer should also provide an analysis and discussion of your model's performance, including identifying situations where the model fails and possible reasons for the failures.

Problem 3. Classifying Digits (40%). The MNIST dataset is a widely established benchmark dataset in computer vision, and recent machine learning methods can achieve almost perfect performance on the dataset. Despite this, digit recognition, and more broadly character recognition, still poses a challenge, as many datasets have far greater variability than is observed in MNIST. One of the main challenges for methods stems from the within-class variability that occurs due to changing conditions. If we consider recognising numbers or characters in natural scenes, we have changes in camera pose, lighting (which impacts brightness, contrast, and the presence of shadows), camera white balance, resolution and changes in the style of font used to render the character. The wide variety of conditions makes recognition challenging, and as such it is desirable to have methods that can generalise to unseen conditions.

Such generalisation can be achieved in a number of ways, with more complex classifiers (such as deep convolutional neural networks) and feature extraction techniques that seek to extract salient features that are common across different domain variations being popular approaches.

The Task

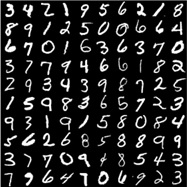

You are to investigate how well classifiers trained using different methods generalise across databases. In this question you will train on the MNIST database, and test on both MNIST and the Street View House Numbers (SVHN) database. Examples from the two databases are shown in Figure 2.

(a) MNIST

(b) SVHN

Figure 2: Examples from the MNIST (left) and SVHN (right) datasets.

To complete this question you have been supplied with the following data files (all in the Q3 directory of the data archive):

- mnist_train.mat: The MNIST training set. Data within this file should be used to train all models.

- mnist_test.mat: The MNIST testing set. This file should be used to evaluate the developed models on ‘in domain’ data. Data in this file should not be used for model training.

- svhn_test.mat: The SVHN testing set. This file should be used to evaluate the developed models on ‘out of domain’ data. Data in this file should not be used for model training. Note that for consistency with the MNIST data, the images in svhn_test.mat have already been converted to greyscale.

Each of these data files contains two variables:

- imgs: A 32 × 32 × N matrix, where N is the number of samples. Each 32 × 32 matrix is a single instance of an image.

- labels: A 1 × N vector, where N is the total number of samples. Each entry is the label for the corresponding image in imgs.
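Many classifiers expect one row per sample, so the arrays described above usually need rearranging first. The sketch below assumes the two variables have already been loaded into NumPy arrays (for example with `scipy.io.loadmat`, which is not shown); the function name `to_feature_matrix` is hypothetical.

```python
import numpy as np


def to_feature_matrix(imgs, labels):
    """Convert (32, 32, N) images and (1, N) labels to an (N, 1024) matrix and an (N,) vector."""
    imgs = np.asarray(imgs)
    n_samples = imgs.shape[2]
    # Move the sample axis to the front, then flatten each image to one row.
    features = np.moveaxis(imgs, 2, 0).reshape(n_samples, -1).astype(np.float64)
    return features, np.asarray(labels).reshape(-1)


# Synthetic stand-in data with the same layout as the supplied files.
demo_imgs = np.zeros((32, 32, 5))
demo_imgs[:, :, 3] = 7
demo_labels = np.array([[0, 1, 2, 3, 4]])
X, y = to_feature_matrix(demo_imgs, demo_labels)
```

Row `i` of `X` then corresponds to `imgs[:, :, i]` and to entry `i` of `y`.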

Using this data you should explore how various classifiers and dimension reduction techniques can impact performance, and the ability of a model to generalise. In particular you should investigate:

- SVMs and CKNN classifiers using both raw data (i.e. pixel-values) and data that has had dimensionality reduction applied;

- Deep Convolutional Neural Network classifiers;

- Principal Component Analysis, Linear Discriminant Analysis and Deep Auto-Encoders as dimensionality reduction methods.

Performance on both the ‘in domain’ MNIST testing data and the ‘out of domain’ SVHN testing data should be considered in your evaluation and analysis.
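When dimensionality reduction is used, the projection should be estimated from the training data only and then applied unchanged to both test sets. The sketch below illustrates this for Principal Component Analysis using a NumPy singular value decomposition; the function names are hypothetical, and the random arrays are stand-ins for flattened image data, not real results.

```python
import numpy as np


def fit_pca(train, n_components):
    """Return the training mean and the top principal axes (as rows) of `train`."""
    mean = train.mean(axis=0)
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(train - mean, full_matrices=False)
    return mean, vt[:n_components]


def apply_pca(data, mean, components):
    """Project `data` using a mean and axes estimated on the training set only."""
    return (data - mean) @ components.T


rng = np.random.default_rng(0)
train = rng.normal(size=(200, 16))             # stand-in for training features
in_domain = rng.normal(size=(50, 16))          # stand-in for in-domain test features
out_domain = rng.normal(size=(50, 16)) + 1.0   # stand-in for shifted, out-of-domain features

mean, components = fit_pca(train, n_components=4)
train_low = apply_pca(train, mean, components)
in_low = apply_pca(in_domain, mean, components)
out_low = apply_pca(out_domain, mean, components)
```

Fitting the projection on either test set would leak test information into the model and would make the in-domain and out-of-domain results harder to compare.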

Note that for some methods it may not be computationally feasible to train on all data. In this case, you should decimate the data to obtain a representative sample that allows you to train and evaluate the model. In such cases you should document how the dataset was reduced.
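One way to decimate the data while keeping every class represented is to draw the same number of samples from each label with a fixed random seed, as sketched below. The function name `stratified_subsample` and the per-class count are illustrative assumptions, and the labels here are synthetic.

```python
import random
from collections import defaultdict


def stratified_subsample(labels, per_class, seed=0):
    """Return sorted indices holding at most `per_class` randomly chosen samples per label."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    rng = random.Random(seed)  # fixed seed so the reduced set can be reproduced
    chosen = []
    for label in sorted(by_class):
        indices = by_class[label]
        chosen.extend(rng.sample(indices, min(per_class, len(indices))))
    return sorted(chosen)


# Synthetic labels: 1000 samples spread evenly over the digits 0-9.
labels = [i % 10 for i in range(1000)]
subset = stratified_subsample(labels, per_class=20, seed=0)
```

Recording the seed and the per-class count in the report is one simple way to document how the dataset was reduced.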
