IMGS 682 homework 4

Instructions

Your homework submission must cite any references used (including articles, books, code, websites, and personal communications). All solutions must be written in your own words, and you must program the algorithms yourself. If you do work with others, you must list the people you worked with. Submit your solutions as a PDF to the Dropbox Folder on MyCourses.

Your homework solution must be prepared in LaTeX and output to PDF format. I suggest using http://overleaf.com or BaKoMa TeX to create your document.

Your programs must be written in either MATLAB or Python. The code relevant to each problem should be in the PDF you turn in. If a problem involves programming, then the code should be shown as part of the solution to that problem. One easy way to do this in LaTeX is to use the verbatim environment, i.e., \begin{verbatim} YOUR CODE \end{verbatim}.

If you have forgotten your linear algebra, you may find the Matrix Cookbook useful, which can be readily found online. You may wish to use the program MathType, which can easily export equations to AMS LaTeX so that you don’t have to write the equations in LaTeX directly: http://www.dessci.com/en/products/mathtype/

If told to implement an algorithm, don’t just call a toolbox.

Problem 1 - Clustering

In this problem you will cluster two datasets of 2-dimensional points that are given in the files pnts1.txt and pnts2.txt. In both files, the first column is the x-dimension and the second column is the y-dimension.

You may use clustering code built into libraries and toolboxes.

Part 1 - k-means (6 points)

Use k-means to cluster pnts1.txt and pnts2.txt. Use values of k = 3, k = 5, and k = 7. You may use the toolbox of your choice. I suggest using the k-means implementation in the VLFeat toolbox because it can handle the larger datasets we will use in later problems. Both the MATLAB and VLFeat toolboxes support using k-means++, which may give better results (read the documentation).

Output your results as a 2×3 image array, where the first row has the results for pnts1.txt and the second contains the clusters for pnts2.txt. Use a distinct color for each cluster assignment, and plot the cluster centers as larger triangles of the same color.
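A toolbox is allowed here. Purely as a reference for what those toolboxes compute, the following is a minimal stdlib-only Python sketch of k-means (Lloyd's algorithm) with k-means++ seeding. It assumes the points are (x, y) tuples already read from pnts1.txt or pnts2.txt; the function name `kmeans` is hypothetical, and toolbox implementations differ in initialization, restarts, and stopping rules.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Cluster 2-D points into k groups; returns (labels, centers)."""
    rng = random.Random(seed)
    # k-means++ seeding: first center uniformly at random, each further
    # center sampled with probability proportional to the squared distance
    # to the nearest center chosen so far.
    centers = [tuple(rng.choice(points))]
    while len(centers) < k:
        d2 = [min(math.dist(p, c) ** 2 for c in centers) for p in points]
        if sum(d2) == 0:  # every point coincides with an existing center
            centers.append(tuple(rng.choice(points)))
        else:
            centers.append(tuple(rng.choices(points, weights=d2)[0]))

    labels = None
    for _ in range(iters):
        # Assignment step: each point goes to its nearest center.
        new_labels = [min(range(k), key=lambda j: math.dist(p, centers[j]))
                      for p in points]
        if new_labels == labels:
            break  # assignments stopped changing: converged
        labels = new_labels
        # Update step: move each center to the mean of its assigned points.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = tuple(sum(col) / len(members) for col in zip(*members))
    return labels, centers
```

Running it with k = 3, 5, and 7 on each file gives the six panels; the returned `centers` are the points to draw as triangles.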

Solution:

IMAGE HERE

CODE HERE

Part 2 - Mean Shift (6 points)

Use Mean Shift to cluster pnts1.txt and pnts2.txt. Use bandwidth values of h = 0.05, h = 0.1, and h = 0.5. Python users may use the toolbox of their choice. MATLAB users should use the provided function MeanShiftCluster.m.

Output your results as a 2×3 image array, where the first row has the results for pnts1.txt and the second contains the clusters for pnts2.txt. Try to use a distinct color for each cluster assignment, but this may not be possible for some bandwidth settings. Plot the cluster modes as larger triangles of the same color.
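To build intuition for what the bandwidth controls, here is a minimal stdlib-only sketch of mean shift with a flat (uniform) kernel. Each point repeatedly moves to the mean of all data points within distance h until it stops moving, and converged points closer than h/2 are merged into one mode. The flat kernel, the h/2 merge rule, and the name `mean_shift` are assumptions of this sketch; the provided MeanShiftCluster.m and library implementations may use different kernels and merge rules.

```python
import math


def mean_shift(points, h, tol=1e-6, max_iter=300):
    """Flat-kernel mean shift on 2-D points; returns (labels, modes)."""
    modes, labels = [], []
    for p in points:
        x = tuple(p)
        # Climb: move x to the mean of all data points within distance h.
        for _ in range(max_iter):
            window = [q for q in points if math.dist(x, q) <= h]
            if not window:  # guard against floating-point edge cases
                break
            new_x = tuple(sum(col) / len(window) for col in zip(*window))
            shift = math.dist(x, new_x)
            x = new_x
            if shift < tol:
                break
        # Merge: reuse an existing mode closer than h / 2, else add a new one.
        for j, m in enumerate(modes):
            if math.dist(x, m) < h / 2:
                labels.append(j)
                break
        else:
            modes.append(x)
            labels.append(len(modes) - 1)
    return labels, modes
```

Small bandwidths produce many modes (possibly more than there are distinguishable colors), while large ones merge everything into a few.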

Solution:

IMAGE HERE

CODE HERE

Part 3 - Clustering with the Method of Your Choice (6 points)

Find code for a clustering algorithm of your choice, e.g., Normalized Graph Cuts or other spectral clustering methods, DBSCAN, Agglomerative Clustering, etc. I suggest using a method that does not require explicitly selecting the number of clusters. Use three different parameter settings, and show the results. Output your results as a 2×3 image array, where the first row has the results for pnts1.txt and the second contains the clusters for pnts2.txt. Try to use a distinct color for each cluster assignment, but this may not be possible for some parameter settings. Discuss which algorithm you used, the parameter settings you tried, and where you got the code for it.
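DBSCAN is one option that does not need the number of clusters. A minimal stdlib-only sketch, assuming 2-D points given as tuples: a point with at least `min_pts` neighbors within `eps` (itself included) is a core point, clusters grow outward from core points, and points reachable from no core point are labeled -1 (noise). The name `dbscan` and the brute-force O(n²) neighbor search are choices of this sketch; library versions such as scikit-learn's `DBSCAN` use spatial indexes and may assign shared border points differently.

```python
import math


def dbscan(points, eps, min_pts):
    """Return a cluster id (0, 1, ...) per point, or -1 for noise."""
    n = len(points)
    # Precompute eps-neighborhoods (each point counts as its own neighbor).
    neighbors = [[j for j in range(n) if math.dist(points[i], points[j]) <= eps]
                 for i in range(n)]
    labels = [None] * n
    cluster = -1
    for i in range(n):
        if labels[i] is not None:
            continue
        if len(neighbors[i]) < min_pts:
            labels[i] = -1  # noise for now; may later become a border point
            continue
        cluster += 1  # i is an unvisited core point: start a new cluster
        labels[i] = cluster
        queue = list(neighbors[i])
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # former noise becomes a border point
            if labels[j] is not None:
                continue
            labels[j] = cluster
            if len(neighbors[j]) >= min_pts:  # core point: keep expanding
                queue.extend(neighbors[j])
    return labels
```

Here `eps` and `min_pts` are the two parameters to vary across the three settings.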

Solution:

DISCUSSION HERE

IMAGE HERE

CODE HERE

Problem 2 - Image Segmentation

We are going to first write some helper functions to get a segmentation out of our clustering algorithms. Then, we will put together the pieces to segment some images.

Part 1 - Preparing the image for clustering (3 points)

Write a function F = getImageFeatures(img, colorSpace, types) that takes as input an n × m × 3 RGB image img, a flag colorSpace indicating which color space to use, and an integer flag types that indicates what kind of features to extract, as follows:

- If types = 1, then output a c × nm matrix containing each pixel’s color value in the selected color space.

- If types = 2, then output a (c + 2) × nm matrix containing each pixel’s color value in the selected color space, augmented with the spatial (x, y) coordinates of each pixel.

- If types = 3, then output a (c + 5) × nm matrix containing each pixel’s color value in the selected color space, augmented with the spatial (x, y) coordinates of each pixel, the x- and y-image gradients (use the filter size of your choice or make it another parameter) computed on a grayscale image or on the L channel for LAB, and the gradient magnitude.
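For the types = 3 features, one simple choice of gradient filter is the central difference [-1, 0, 1]/2. This stdlib-only sketch assumes the grayscale (or L) channel is a 2-D list of floats and replicates border pixels when a neighbor falls outside the image; the name `image_gradients` is hypothetical, and Sobel or derivative-of-Gaussian filters are equally valid choices.

```python
import math


def image_gradients(gray):
    """Central-difference x/y gradients and gradient magnitude of a 2-D list."""
    n, m = len(gray), len(gray[0])
    gx = [[0.0] * m for _ in range(n)]
    gy = [[0.0] * m for _ in range(n)]
    for r in range(n):
        for c in range(m):
            # Clamp neighbor indices so border pixels are replicated.
            left, right = gray[r][max(c - 1, 0)], gray[r][min(c + 1, m - 1)]
            up, down = gray[max(r - 1, 0)][c], gray[min(r + 1, n - 1)][c]
            gx[r][c] = (right - left) / 2.0
            gy[r][c] = (down - up) / 2.0
    mag = [[math.hypot(gx[r][c], gy[r][c]) for c in range(m)] for r in range(n)]
    return gx, gy, mag
```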

For color spaces, allow the options: RGB (c = 3), Grayscale (c = 1), LAB (c = 3), and the colorspace of your choice. You may use a toolbox to do the conversions.

Before returning, the function should normalize each row of F by dividing it by the standard deviation of that row, so that all features are on a similar scale.
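The following small stdlib-only sketch shows the types = 1 and types = 2 feature layouts for RGB, followed by the row normalization. It assumes the image is an n × m × 3 nested list and stacks pixels in row-major order. The MATLAB tip below is column-major, which also works as long as the same order is used when reshaping cluster labels back into an image. Both function names are hypothetical. The sketch uses `statistics.pstdev` (population standard deviation); MATLAB's `std` defaults to the sample version, which only changes the scale slightly.

```python
import statistics


def rgb_xy_features(img, with_xy=False):
    """Stack an n x m x 3 nested-list image into a c x nm feature matrix.

    Rows are R, G, B and, if with_xy is set, the x (column) and y (row)
    coordinates. Pixels are taken in row-major order."""
    n, m = len(img), len(img[0])
    F = [[img[r][c][ch] for r in range(n) for c in range(m)] for ch in range(3)]
    if with_xy:
        F.append([float(c) for r in range(n) for c in range(m)])  # x coordinate
        F.append([float(r) for r in range(n) for c in range(m)])  # y coordinate
    return F


def normalize_rows(F):
    """Divide each row by its standard deviation; constant rows are kept as-is."""
    out = []
    for row in F:
        sd = statistics.pstdev(row)
        out.append([v / sd for v in row] if sd > 0 else list(row))
    return out
```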

You only need to give code here, but you will use this function later.

Tip for reshaping an image x in MATLAB: y = reshape(shiftdim(x, 2), [3, size(x, 1)*size(x, 2)]);

Solution:

CODE HERE

Part 2 - Segmentation visualization (4 points)

Write a function sImg = colorSegmentedImage(img, seg) that takes in an image img and a segmentation mask seg. The function outputs a new image with each segmented region containing the average color of that region in the original image.
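A minimal stdlib-only sketch of the averaging step, assuming `img` is an n × m × 3 nested list and `seg` is an n × m nested list of integer labels. The name `color_segmented_image` is a hypothetical Python counterpart of colorSegmentedImage: it accumulates per-segment color sums in one pass, then writes each segment's mean color back to its pixels.

```python
from collections import defaultdict


def color_segmented_image(img, seg):
    """Replace every pixel by the mean color of the segment it belongs to."""
    sums = defaultdict(lambda: [0.0, 0.0, 0.0])
    counts = defaultdict(int)
    # First pass: per-segment color sums and pixel counts.
    for img_row, seg_row in zip(img, seg):
        for pixel, label in zip(img_row, seg_row):
            s = sums[label]
            for ch in range(3):
                s[ch] += pixel[ch]
            counts[label] += 1
    means = {lab: [v / counts[lab] for v in s] for lab, s in sums.items()}
    # Second pass: paint each pixel with its segment's mean color.
    return [[list(means[label]) for label in seg_row] for seg_row in seg]
```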

You only need to give code here, but you will use this function later.

Solution:

CODE HERE

Part 3 - Segmentation with k-Means (10 points)

Write a function seg = kMeansSegment(img, k, colorSpace, types) that takes as input an n × m × 3 image img, the number of clusters k > 1 for k-means, and the colorSpace and types parameters, which are the same as those in the function getImageFeatures. The function outputs an n × m integer image containing the cluster assignment of each pixel.

Now, put all of your pieces of code together to segment the images fruit.jpg and bears.jpg. Use the function colorSegmentedImage you wrote earlier to visualize each segmentation. For each image, you must visualize three different interesting parameter settings, with one setting chosen to optimize the segmentation into semantically reasonable segments. Make sure to label the images with the parameters chosen, and display each as a 1 × 3 labeled figure with the segmentation.

You may use smaller images when debugging your system to make it faster, and if necessary you can use them throughout. Just explain what you are doing.

Solution:

FRUIT SEGMENTATION HERE

BEAR SEGMENTATION HERE

CODE HERE FOR YOUR FUNCTION. SHOULD BE ABOUT FOUR LINES BY CALLING THE OTHER FUNCTIONS

Part 4 - Segmentation with Mean Shift (10 points)

Write a function seg = meanShiftSegment(img, bandwidth, colorSpace, types) that takes as input an n × m × 3 image img, a floating-point value bandwidth that sets the window size used by Mean Shift, and the colorSpace and types parameters, which are the same as those in the function getImageFeatures. The function outputs an n × m integer image containing the cluster assignment of each pixel.

Now, put all of your pieces of code together to segment the images fruit.jpg and bears.jpg. Use the function colorSegmentedImage you wrote earlier to visualize each segmentation. For each image, you must visualize three different interesting parameter settings, with one setting chosen to optimize the segmentation into semantically reasonable segments. Make sure to label the images with the parameters chosen and the number of modes found, and display each as a 1 × 3 labeled figure with the segmentation.

You may use smaller images when debugging your system to make it faster, and if necessary you can use them throughout. Just explain what you are doing.

Solution:

FRUIT SEGMENTATION HERE

BEAR SEGMENTATION HERE

CODE HERE

Part 5 - Comparison (6 points)

Discuss how well each of the methods worked on the two images. What are the pros and cons of each method in your opinion? How did changing the color space and the features influence the segmentations?

Solution:

TEXT HERE

Problem 3 - Object Recognition

In this problem we will do image classification using the Oxford Pet Dataset. The dataset consists of 37 categories with about 200 images each. To make your life easier, I parsed the files for you and split them into train (PetsTrain.mat) and test (PetsTest.mat) sets. The file names are in the files field and the labels are in the label field.

Part 1 - Gaussian Color Space (3 points)

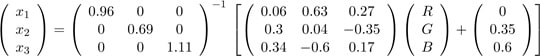

Write a function out = rgb2gaussian(img) that converts an image from RGB colorspace to normalized Gaussian colorspace. If your RGB pixels are between 0 and 1, the transformation from RGB to normalized Gaussian color space is given by:

Convert the image peppers.png to normalized Gaussian color space and display the image as an RGB image. The colors will be weird, and the garlic will look a bit orange.

Solution:

IMAGE HERE

CODE HERE

Part 2 - Simple Classifiers (6 points)

Write a function y = img2vec(fname, colorspace) that loads an image, resizes it to 32 × 32, and converts it to grayscale, RGB, or Gaussian colorspace. Write a script that uses img2vec along with methods from Homework 1 to train a nearest neighbor classifier with Euclidean distance and a linear classifier of your choice on the Pet dataset. Compute the mean-per-class accuracy across the 37 categories. Train on all of the training data. You should convert your images to single precision.

Show your results as a table for all three colorspaces. Make sure to mention what linear classifier you used and the size of the images. I suggest using a Support Vector Machine or Logistic Regression. You may use a toolbox.

You don’t need to give us the code for the classifiers, since you will be calling toolboxes or code you wrote earlier. Make your code short and robust so that you can just switch two flags, one for the choice of classifier and one for the choice of color representation.

Report mean-per-class accuracy, i.e., the mean of the diagonal of a normalized confusion matrix. Chance is 2.7%. Using RGB with nearest neighbor your accuracy should be very close to 6.9% if you did everything correctly.
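Mean-per-class accuracy is the average of the per-class recalls (the diagonal of the row-normalized confusion matrix), so a classifier that ignores a rare class is penalized even if its overall accuracy is high. A stdlib-only sketch, assuming true and predicted labels are given as two equal-length lists; the name `mean_per_class_accuracy` is hypothetical.

```python
def mean_per_class_accuracy(y_true, y_pred):
    """Average over classes of the fraction of that class predicted correctly."""
    classes = sorted(set(y_true))
    per_class = []
    for c in classes:
        idx = [i for i, t in enumerate(y_true) if t == c]
        correct = sum(1 for i in idx if y_pred[i] == c)
        per_class.append(correct / len(idx))  # one diagonal entry
    return sum(per_class) / len(per_class)
```

For example, predicting class 0 for all of `[0, 0, 0, 0, 1]` gives 80% plain accuracy but only 50% mean-per-class accuracy. Guessing uniformly at random gives 1/37 ≈ 2.7% in expectation, matching the chance level above.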

Solution:

LINEAR CLASSIFIER TYPE MENTIONED

CODE HERE

Table 1: Results as mean-per-class accuracy (%) without extracting features.

| | Grayscale | RGB | Gaussian |
|---|---|---|---|
| Nearest Neighbor | ?% | ?% | ?% |
| Linear Classifier | ?% | ?% | ?% |

Part 3 - Bag of Visual Words (10 points)

Implement a bag-of-visual-words feature representation and use it to classify the Pet dataset. To do this, extract dense SIFT descriptors from each image. You should use VLFeat or OpenCV for this. Here are the steps:

- Extract dense SIFT descriptors from 100 images randomly chosen from the training dataset.

- Use whitened PCA to reduce the dimensionality of the SIFT descriptors you extracted (use 80 for grayscale and 120 for the others).

- Cluster the descriptors using k-means into a dictionary of 600 elements.

- Extract a collection of dense descriptors for each image and then use the dictionary to turn them into a bag-of-words histogram.

- Use the bag-of-words features to train nearest neighbor and linear classifiers.
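The quantization step in the list above can be sketched as follows, assuming each PCA-reduced descriptor and each dictionary word is a sequence of floats. Hard assignment to the nearest word and L1 normalization of the histogram are common choices but are assumptions here; `bow_histogram` is a hypothetical name.

```python
def bow_histogram(descriptors, dictionary):
    """Quantize each descriptor to its nearest visual word (squared Euclidean
    distance) and return an L1-normalized histogram of word counts."""
    hist = [0.0] * len(dictionary)
    for d in descriptors:
        word = min(range(len(dictionary)),
                   key=lambda k: sum((a - b) ** 2 for a, b in zip(d, dictionary[k])))
        hist[word] += 1
    total = sum(hist)
    return [h / total for h in hist] if total else hist
```

With a 600-word dictionary, every image becomes a 600-dimensional histogram regardless of how many descriptors it produced.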

Do this for grayscale, RGB, and Gaussian color spaces. To handle the chromatic color spaces, run SIFT separately on each of the three channels and concatenate the descriptors[1]. For nearest neighbor, use the χ2 distance, which was described in Homework 3, instead of the Euclidean distance.
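Homework 3's exact χ2 definition is not reproduced here. This sketch assumes the common form χ2(x, y) = ½ Σᵢ (xᵢ − yᵢ)² / (xᵢ + yᵢ) on non-negative histograms, skips bins where both entries are zero, and uses it for 1-nearest-neighbor classification. Both function names are hypothetical.

```python
def chi2_distance(x, y, eps=1e-12):
    """0.5 * sum((x_i - y_i)^2 / (x_i + y_i)), ignoring empty bins."""
    return 0.5 * sum((a - b) ** 2 / (a + b) for a, b in zip(x, y) if a + b > eps)


def nearest_neighbor(query, train_feats, train_labels):
    """Label of the training histogram with the smallest chi-squared distance."""
    best = min(range(len(train_feats)),
               key=lambda i: chi2_distance(query, train_feats[i]))
    return train_labels[best]
```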

MATLAB users: Transform your bag-of-words histogram using a homogeneous kernel map to enable χ2 similarity to be used. In VLFeat, you can use the vl_homkermap function for this. Some code for doing this is given here: http://www.vlfeat.org/applications/caltech-101-code.html . That link contains just about all the code you need, except that you will need to do some work to use the Gaussian colorspace and to use nearest neighbor.

Python users: You can skip using the homogeneous kernel map step, unless you want to implement it or can find code online.

Solution:

CODE HERE

Table 2: Results as mean-per-class accuracy (%) using bag-of-visual-words features.

| | Grayscale | RGB | Gaussian |
|---|---|---|---|
| Nearest Neighbor | ?% | ?% | ?% |
| Linear Classifier | ?% | ?% | ?% |

Part 4 - Using Pre-Trained Deep CNNs (6 points)

For this problem you will use a pre-trained neural network, which will later also serve as a feature extractor for the images. Run the model on peppers.png and output the labels of the top-3 predicted categories.
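Once the network returns one score per ImageNet class, picking the top 3 is a sort. This stdlib-only sketch assumes raw, pre-softmax scores (logits) and a list of class names in the same order; if your model's last layer already outputs probabilities, skip the softmax and sort those directly. The name `top_k` is hypothetical.

```python
import math


def top_k(scores, class_names, k=3):
    """Return the k (name, probability) pairs with the highest softmax probability."""
    mx = max(scores)
    exps = [math.exp(s - mx) for s in scores]  # subtract max for numerical stability
    z = sum(exps)
    probs = [e / z for e in exps]
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    return [(class_names[i], probs[i]) for i in order[:k]]
```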

MATLAB Users: You will need to use the MatConvNet toolbox for this: http://www.vlfeat.org/matconvnet. If your computational resources are limited, you should use imagenet-vgg-f. If you have more resources and time, consider using imagenet-vgg-verydeep-16. A tutorial for doing this with MatConvNet is provided here (you just need to change the last few lines to get three predictions instead of only one): http://www.vlfeat.org/matconvnet/quick/

Python Users: You may use the toolbox of your choice, but I suggest using Keras, TensorFlow, or Caffe. VGG16 is available for Caffe at the model zoo, and for Keras here: https://gist.github.com/baraldilorenzo/07d7802847aaad0a35d3 .

Solution:

LIST OF LABELS IN ORDER FROM HIGHEST PROBABILITY TO SMALLEST

CODE HERE

Part 5 - Deep Transfer Learning (10 points)

Rather than using the final ‘soft-max’ layer of the CNN to make predictions, we will use the CNN as a feature extractor to classify the Pets dataset. For each image, grab features from the last hidden layer of the neural network, which is the layer before the 1000-dimensional output layer (around 2000–6000 dimensions). Using MatConvNet, you may need to set the ‘precious’ flag for that layer to get those features. You will need to resize the images to a size compatible with your network (usually 224×224×3). If your CNN model uses ReLUs, then you should set all negative values to zero.

After you extract these features for all of the images in the dataset, normalize them to unit length by dividing by the L2 norm. Train a linear classifier with the training CNN features, and then classify the test CNN features. Report mean-per-class accuracy.
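The two post-processing steps described above (zeroing negative activations, then scaling to unit L2 norm) amount to a few lines. A stdlib-only sketch, assuming one feature vector is a list of floats; the name `postprocess_cnn_features` is hypothetical.

```python
import math


def postprocess_cnn_features(feat):
    """Zero out negative activations (ReLU), then scale to unit L2 norm."""
    relu = [max(v, 0.0) for v in feat]
    norm = math.sqrt(sum(v * v for v in relu))
    return [v / norm for v in relu] if norm > 0 else relu
```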

Solution:

CODE HERE

Bonus Problem 1 - Video Classification (10 points)

For this problem, you will need to download the dogcentric vision dataset (four files) from MyCourses. You will need to unzip all four zip files into one folder, which will then contain 10 subfolders. These 10 subfolders correspond to the 10 categories, which are the activities you need to classify. For each category, treat half the videos as training and half as testing, rounding the number of training videos down if needed. You do not need to process every frame. I suggest processing only every n-th frame, and you should make n a tunable parameter.

You need to devise an algorithm for video classification. Here are a couple ideas:

- Extract CNN features from each frame and then create a bag-of-words representation for a video (basically a histogram of CNN features). You can then just train a classifier.

- Extract CNN features from each frame and then convert the features for a given video to a single vector simply by summing them across frames and then normalizing them to unit length.
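The second idea in the list above pools frames by summation. A stdlib-only sketch, assuming the per-frame CNN features for one video are given as a list of equal-length float lists; `video_descriptor` is a hypothetical name.

```python
import math


def video_descriptor(frame_features):
    """Sum per-frame feature vectors, then scale the sum to unit L2 norm."""
    summed = [sum(vals) for vals in zip(*frame_features)]
    norm = math.sqrt(sum(v * v for v in summed))
    return [v / norm for v in summed] if norm > 0 else summed
```

Because of the final normalization, videos with different numbers of sampled frames yield comparable descriptors.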

Report the mean-per-class accuracy across the test dataset. Your method should perform well above chance (10% for 10 categories); aim for 35% or higher. If possible, do multiple runs of your algorithm with different train/test splits and compute the mean and standard deviation across runs.

The current state-of-the-art is about 75% on this dataset and incorporates both CNN features and optical flow features: https://arxiv.org/abs/1412.6505

NO HELP BEYOND CLARIFICATION QUESTIONS WILL BE PROVIDED WITH THIS BONUS PROBLEM! Your grade will be proportional to how well your method works and the quality of your write-up. We will not give partial credit for a non-working solution.

Explain your method.

Solution:

Bonus Problem 2 - Object Detection

In this problem you are going to detect cat heads in the Pets dataset. The bounding box annotations are provided in PetsTrain.mat in the head field. The field is organized as

.

The head is not defined for all images, and a boolean flag in the variable headDefined indicates if the head is defined or not. The training images to use are given in the field catDetectorTrain and the testing images to use are given in the field catDetectorTest. There should be 800 training images and 2871 test images.

NO HELP BEYOND CLARIFICATION QUESTIONS WILL BE PROVIDED WITH THIS BONUS PROBLEM! Your grade will be proportional to how well your method works and the quality of your write-up. Each part is potentially very time consuming to implement and to tune to make it actually work well. Do not expect partial credit for a non-working system.

You will find the vl_pr function in VLFeat useful for computing average precision. You may also use vl_plotbox.

Part 1 (10 points)

Implement a method of generating bounding box proposals using the segmentation algorithms we used earlier. You will need to over-segment the image and then merge the segments. You should generate a bounding box that encloses each segment.

Tune your method so that it has high recall, while having as few extra boxes as possible. One way you might do this is by throwing out boxes that are far outside of the distribution of box sizes observed in the annotations.

Explain how your method works. Tune your parameters to get over 90% recall of the animal faces, and report the recall you achieved and the average number of boxes generated per image. Show the output of all of the bounding boxes (blue) as well as the ground truth (green) on one of the images in the dataset.


Solution:

Part 2 (10 points)

Using your function for generating bounding box proposals, implement a classification pipeline that classifies whether each box is a cat’s head or not. Use data augmentation by doing horizontal flips of the training cat heads. You may consider extracting CNN features from each box proposal (R-CNN) or extracting a bag-of-visual words. You should retrain your system at least twice using hard negatives.

You will need to devise a way to combine overlapping boxes using clustering or some non-maximal suppression scheme.
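One common way to combine overlapping boxes is greedy non-maximal suppression: visit boxes from highest to lowest score and keep a box only if it does not overlap an already-kept box too much. This sketch assumes boxes are (x1, y1, x2, y2) tuples in continuous coordinates with one classifier score each. The threshold of 0.3 is an illustrative default to tune, and the function names are hypothetical. BBOverlap.m may use a different box convention (for example, inclusive pixel coordinates), so check it before reusing `iou` for hit/false-positive decisions.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, thresh=0.3):
    """Greedy NMS; returns indices of kept boxes in descending score order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i only if it does not overlap any already-kept box too much.
        if all(iou(boxes[i], boxes[j]) <= thresh for j in keep):
            keep.append(i)
    return keep
```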

Show how well your method works on three test images (two with cats and one with a dog), show a precision-recall curve, and compute the average precision as defined by TREC, which you can get using vl_pr. The function BBOverlap.m on MyCourses can be used to determine if a box is a hit or a false positive. Similar functions can be implemented or found for Python.

Explain your method.

Solution:

[1] In the literature, running SIFT on RGB images is called RGB-SIFT and when using images in Gaussian space it is called C-SIFT.