Assignment 4 Written Practical Report

Assignment 4 relates to the specific course learning objectives 1, 2, 3 and 4.

- demonstrate applied knowledge of people, markets, finances, technology and management in a global context of business intelligence practice (data warehouse design, data mining process, data visualisation and performance management) and resulting organisational change and how these apply to implementation of business intelligence in organisation systems and business processes

- identify and solve complex organisational problems creatively and practically through the use of business intelligence and critically reflect on how evidence based decision making and sustainable business performance management can effectively address real world problems

- comprehend and address complex ethical dilemmas that arise from evidence based decision making and business performance management

- demonstrate the ability to communicate effectively in a clear and concise manner in written report style for senior management with correct and appropriate acknowledgment of main ideas presented and discussed.

The key frameworks, concepts and activities covered in modules 2–12 and more specifically modules 6 to 12 are particularly relevant for this assignment. This assignment consists of three tasks 1, 2 and 3 and builds on the research and analysis you conducted in Assignment 2.

Task 1 is concerned with developing and evaluating a model of key factors for predicting whether customers are likely to forfeit on a loan resulting in a loan delinquency for ACME Bank.

Task 2 is concerned with the key governance issues of security, privacy and ethics in the day to day use of a data warehouse which increasingly will incorporate unstructured big data.

Task 3 is concerned with performance management, and provides you with the opportunity to design and build a visual and interactive crime events dashboard to meet the decision making requirements of the City of San Francisco Police Department’s Crime Analysis unit with drill down capability using the Tableau Desktop software.

Note you must use RapidMiner Studio for Task 1 and Tableau Desktop for Task 3 in this Assignment 4. Failure to do so may result in the tasks Tasks 1 or 3 not being marked and you will be awarded zero marks.

Note carefully University policy on Academic Misconduct such as plagiarism, collusion and cheating. If any of these occur they will be found and dealt with by the http://policy.usq.edu.au/documents/14727PL USQ Academic Integrity Procedures. If proven, Academic Misconduct may result in failure of an individual assessment, the entire course or exclusion from a University program or programs.

Task 1 (Worth 35 marks)

The goal of Task 1 is to predict the likelihood of a customer forfeiting on a loan for ACME Bank, in other words the likelihood of a loan delinquency Hence the question we are trying to answer is: Is a customer likely to forfeit on their loan and become a loan delinquency for the ACME Bank.

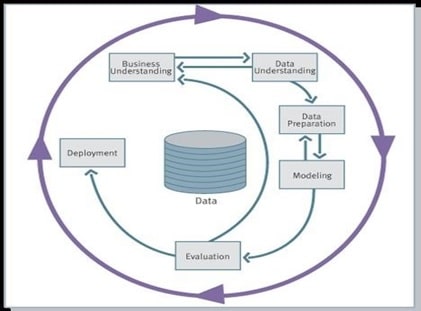

In Task 1 of this Assignment 4 you are required to follow the six step CRISP DM process and make use of the data mining tool RapidMiner to analyse and report on the credit scoring training data set loan-delinq-train.csv and credit scoring test data set loan-delinq-test.csv provided for Assignment 4. You should refer to the data dictionary for loan-delinq-train.csv (see Table 1 below). In Task 1 and 2 of Assignment 4 you are required to consider all of the business understanding, data understanding, data preparation, modelling, evaluation and deployment phases of the CRISP DM process.

Data dictionary for loan-delinq-train data set variables

|

Variable Name |

Description |

Type |

|

SeriousDlqin2yrs |

Person experienced 90 days past due delinquency or worse |

Y/N |

|

RevolvingUtilizationOfUnsecuredLines |

Total balance on credit cards and personal lines of credit except real estate and no installment debt like car loans divided by the sum of credit limits |

percentage |

|

age |

Age of borrower in years |

integer |

|

NumberOfTime30-59DaysPastDueNotWorse |

Number of times borrower has been 30-59 days past due but no worse in the last 2 years. |

integer |

|

DebtRatio |

Monthly debt payments, alimony,living costs divided by monthy gross income |

percentage |

|

MonthlyIncome |

Monthly income |

real |

|

NumberOfOpenCreditLinesAndLoans |

Number of Open loans (installment like car loan or mortgage) and Lines of credit (e.g. credit cards) |

integer |

|

NumberOfTimes90DaysLate |

Number of times borrower has been 90 days or more past due. |

integer |

|

NumberRealEstateLoansOrLines |

Number of mortgage and real estate loans including home equity lines of credit |

integer |

|

NumberOfTime60-89DaysPastDueNotWorse |

Number of times borrower has been 60-89 days past due but no worse in the last 2 years. |

integer |

|

NumberOfDependents |

Number of dependents in family excluding themselves (spouse, children etc.) |

integer |

- Research credit scoring variables in the loan-delinq-train data set to determine key factors influencing the likelihood that a customer will forfeit on a loan and become a loan delinquency. This will provide you with a business understanding of the dataset you will be analysing in Assignment 4 Task 1. Identify which variable/s can be omitted from your credit scoring and loan forfeit data mining model and why. Comment on your findings in relation to the key factors likely to indicate that a customer will forfeit on a loan and become a loan delinquency for ACME Bank (about 1000 words).

- Conduct an exploratory analysis of the loan-delinq-train.csv data set. Are there any missing values, variables with unusual patterns? How are the characteristics of the training data set loandelinq-train.csv consistent with the test data set loan-delinq-test.csv? Are there any interesting relationships between the potential predictor variables and your target variable SeriousDlqin2yrs? Is a customer likely to forfeit on a loan and become a loan delinquency? (Hint: identify the variables that will allow you to reduce the data set into a smaller subgroup and more parsimonious model). Comment on what key variables in the data set loan-delinq-train.csv might influence differences in the likelihood of loan delinquency occurring for a customer of ACME Bank (About 250 words).

- Run a decision tree analysis using RapidMiner. Consider what variables you will want to include in this analysis and report on the results. (Hint: Identify what is your target variable and what are your predictor variables?) Comment on the results of your final decision tree model (About 250 words).

.

- Run a logistic regression analysis using RapidMiner, Again consider what variables you will want to include in this analysis and report on the results (Note for the logistic regression analysis you will need to use the weka extension and w-logistic operator). (Hint: Identify what is your target variable and what are your predictor variables?) Comment on the results of your final logistic regression model (About 250 words).

- Based on the results of the Decision Tree analysis and Logistic Regression analysis - What are the key variables and rules for predicting whether a customer of ACME Bank is likely to forfeit on their loan and become a loan delinquency will have true (1) or false (0) outcome? (Hint: with RapidMiner you will need to validate your models on the loan-delinq_train.csv data using a number of validation processes for the two models you have generated previously using decision trees and logistic regression analysis models). Comment on your two predictive models for predicting the likelihood of a customer forfeiting on a loan to a false/positive matrix, and ROC chart (Note: these outputs can be easily obtained from the relevant performance operator in RapidMiner. Comment on the results of your final model (About 250 words).

Overall for Task 1 you need to report on the output of each analysis in sub task activities and briefly comment on the important aspects of each analysis and relevance to bank customer behaviours and propensity to forfeit on a loan and become a loan delinquency (Note: you will find the North text book an invaluable reference for completing the data mining process activities) (about 2000 words overall for Task note we have indicated for each sub task roughly how many words should be provided in a written explanation).

Note the important statistical outputs from your data mining model analyses in RapidMiner should be included as appendices in your Assignment 4 report to provide support your conclusions reached regarding each analysis for Task 1 and are not to be included in the word count

Task 2 (Worth 20 marks)

- Reflecting on the logical data warehouse you designed in Assignment 2 Task 2 you should now consider how you will ensure the governance of this data warehouse which will includ unstructured big data. Your discussion should focus on the controls that you would put in place to ensure that there is an appropriate level of security and privacy for the information captured, stored and retrieved for decision making when using the proposed data warehouse (about 1000 words).

- Discus some of the key ethical concerns for the day to day use of a data warehouse given that increasingly some decision making might become machine-to-machine automated decision making in response to events in a workflow. Identify ways in which governments and legislation are keeping pace with this phenomena of big data and data driven decision making and the lessening of human intervention in this process (about 500 words).

Task 3 (Worth 35 marks)

San Francisco Police Department Crime Events Dashboard

San Francisco Police Department are responsible for enforcing law and order in the City of San Francisco. The 13th most populous city in the United States with a population of over 850,000 in the main city boundary and a population density of over 7,000 people per sq. km. They would like to have a Crime Events dashboard built for the City of San Francisco with the aim of giving them a better understanding of the patterns that are occurring in relation to different crimes across the 10 Police Department districts in the city. In particular, they would like to see if there are any distinct patterns in relation to (1) types of crimes, (2) frequency of each type of crime across each of the 10 Police Department districts from the years 2003 through to 2015 (note that the year 2015 is not complete). This Crime Events dashboard will allow the San Francisco Police Department to manage and coordinate their efforts in catching the perpetrators of these crimes and be more proactive in preventing these crimes from occurring in the first place. The San Francisco Police Department hope that by being able to identify crime hotspots and trends for particular types of crimes across the 10 Police Department districts that they can be proactive and strategic in their efforts and actually reduce the occurrence of crime and make the city a safer place for its residents.

The San Francisco Police Department Crime Analytics Unit want the flexibility to visualize the frequency that each type of crime is occurring over time across each of the 10 Police Department districts in the City of San Francisco. They want to be able to get a quick overview of the crime data in relation to the category, location and frequency with which each crime is occurring over time and then be able to zoom and filter on particular aspects and then get further details as required. The data has been extracted from the City of San Francisco Police Department crime events data sources for the purposes of this Assignment 4.

For Task 3 you need to create

(a) A visual dashboard (Crimes Event Dashboard) to satisfy the requirements of the City of San Francisco Police Department ‘s Crime Analysis Unit to be proactive and strategic in their efforts and actually reduce the occurrence of crime and make the City a safer place for its residents for the following data set (sfpd-crimedata-2003-2015.csv). This dashboard consists of four specified crime analysis reports to be viewed at the City of San Francisco Police Department District levels visually and in terms of the numeric data concerning crime events:

- Top 10 most frequently committed crimes by year and by Police Department district

- Top 10 least frequently committed crimes by year and by Police Department district

3 The most improved Police Department District in terms of crime statistics (frequency of committed crimes) over the last thirteen years

- A summary of the crime statistics for a given crime, Police Department district in the City of San Francisco for a given year

- Note for the challenge part of Assignment 4 Task 3 it is possible to create a geomap representation of this crime data that can be imported and incorporated into your City of San Francisco Police Department Crime Analysis Dashboard but this will require you to have a look at openstreetmap data https://www.openstreetmap.org/ and capture a visual layered map of the City of San Francisco and determine a way to import this data into Tableau using a format and vendor that Tableau recognizes using another map visualization tool such as Mapbox https://www.mapbox.com).

You should briefly discuss the key findings for each of these reports in your Crimes Event Dashboard

- Provide and discuss your rationale (drawing on the relevant literature) that has informed the graphic design and functionality that is provided in your dashboard for the City of San Francisco Police Department Crime Analysis unit, in terms of how it meets their requirements for four specified crime analysis reports (About 1000 words). You will need to submit your Tableau workbook in .twbx format which contains your dashboard as a separate document to your main report for Assignment 4.

Report presentation, and quality of discussion and argument for each task appropriately supported by relevant number of references (10 marks)

The assignment 4 report must be structured as follows:

- Cover page for assignment 4 report

- Executive summary

- Table of contents

- Body of report – main sections and subsections for each Task and sub task such as

Task 1 sub task a) etc…

Task 2 task

Task 3 sub task etc

- List of References

- Appendices to accompany Task 1 data mining analyses