KMP algorithm Assignment Help

1. Knuth-Morris-Pratt Introduction

Knuth-Morris-Pratt also called KMP is a pattern matching algorithm.

There are various other algorithms which are used for pattern matching. For example, Rabin Karp algorithm, Boyer Moor etc. KMP is one of them.

First, we need to understand, what is pattern matching algorithm?

1.1 Pattern matching algorithm

Let’s say we have a large text T containing words [1…..n] and we need to find the occurrence of pattern P [1..m] in this text T. To accomplish this task, we need some algorithms. Those algorithms are called pattern matching algorithms and KMP is one of them. But here two questions arise:

- Why do we need a separate algorithm for this task?

- What is the application of pattern matching? Where do we need pattern matching?

KMP algorithm Assignment Help By Online Tutoring and Guided Sessions at AssignmentHelp.Net

Firstly, we’ll see the role of pattern matching.

1.1.1 Why do we need Pattern matching?

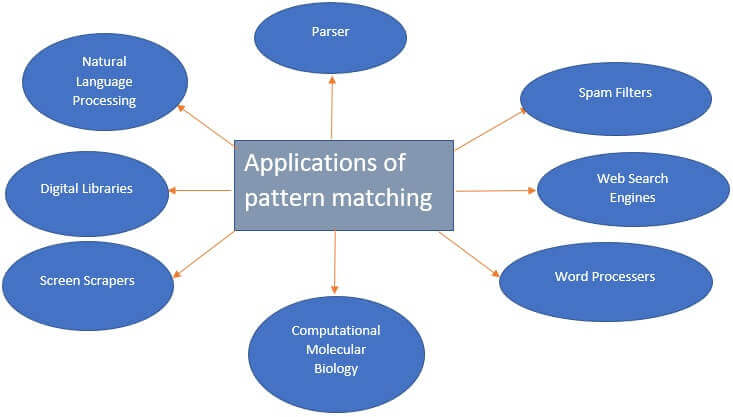

In this section, we’ll talk about the applications of pattern matching. In daily life, whenever we search a particular string in notepad or word file, this search utility is the result of pattern searching algorithms. And there are unlimited areas where we are using the concept of pattern matching, we have listed some of them in the following figure.

1.1.2 Why do we need different algorithms?

As we saw in above figure, the importance of pattern matching. There is also an important detail about pattern matching which we should know. It is not necessary that every time people will search only text. They may want to search different types of data like audio, video, image etc. So tackle with different data types, we need better algorithms. After finding the location of matching pattern, it can also be used to substitute some component of that pattern with another string sequence that is first search then replace.

2. The Naïve Method

Let’s first study the naïve method for doing this. The idea is to take each word and compare it with the pattern.

{`

Pseudocode for this method

Function match(text[], pattern[])

{

// n is size of text

// m is size of pattern

for(i = 0; i < n; i++)

{

for(j = 0; j < m && i + j < n; j++)

if(text[i + j] != pattern[j])

break; //doesn’t match, break the inner loop

if(j == m) // match found

}

}

`}

As we know, the problem of pattern matching is like a needle in haystack and If the length of the text is n and that of pattern is m. Then, it will take O(n*m) iterations to complete the task. In that case, the time complexity for worst case is O(mn). That is why, this method is impractical.

3. KMP algorithm

This algorithm was developed by Donald Knuth, Vaughan Pratt and James H. Morris in 1977. The idea was to search occurrences of a word within a text by using the result of previously matched characters. It uses the observation through which if a mismatch occurs, the word itself will provide sufficient information to determine where we’ll find the next match. At high level, there is a similarity between KMP and the naïve algorithm. In both the cases, the scanning is done in the same order that is from 1 to n-m, where n is the length of the text and m is the length of the pattern. But in KMP algorithm, it uses the information obtained by the previous occurrences of the pattern in the whole text. By using this information, it is able to skip those parts of the text which cannot contain the pattern we are searching. If we can calculate that how much we can shift in advance, then we can reduce the number of iterations. We have explained this in the following example:

{`

Position: 1 2 3 4 5 6 7 8 9 10 11 12 13 14

Text (t): a b a b a a b b a b a b b a

Pattern (p): a b a b b

a b a b b

a b a b b

a b a b b

`}

Start from the very first position, here the pattern is matched up to position 4th. At position 5 mismatch occurred, so shifting the pattern one place will not do any good. We also need to match the pattern at position 3 with the text at position 5, so as to make sure we don’t miss any matches. We’ll continue matching from position 5. Then, we encounter another mismatch at position 6. This shifting does not work. This is because, we did not consider the fact that ab is repeating itself in the pattern, thus only two places shift resulted in mismatch. Since the condition pattern at position 1 pattern at position 4 is satisfied. Thus, we take a shift of three places and resume matching at position 6 and here we find mismatch at position 8. Then again, we shifted the pattern three places. And finally, we find the pattern at position 9 of the text.

The naïve algorithm would have taken 23 iterations, but this algorithm will give result in 15 iterations.

Now, we’ll mathematically deduce the above explained shift:

Let A[1….m] be the array which gives the information that how much shift do we need. This array is called Prefix array, it stores the information of previous matches in such a way that will exactly tell us the position where we have to jump for finding the match. The length of this array is equal to the length of the pattern. For example, If this array at any column contains the value 1. That means the sequence up to which the text matched contain the same prefix and suffix of length 1. The length of the longest proper prefix in the pattern that matches a proper suffix in the same pattern. So, we can shift accordingly. In other words, we do not need to backtrack on the text.

A[1]=0

A[i] = max (s| (s=0) or (p[1… s-1] = p[i-s+1 .. i-1] and p[s] p[i])), the maximisation of s is needed that means minimised shift. So, that, no occurrence of pattern will be missed3.1 Matching algorithm

{`

1. i=1; j=1;

2. while (i<=m) and (j<=n) do begin

3. while (i>=0) and (p[i]<>[j])

4. do i=h[i];

5. i=i+1; j=j+1

6. end

7. if i <= m then print(“pattern is not found in the text”)

8. else print(“found at”, j-i+1)

`}

Let the function f(i) be defined by

{`

f(1) = 0

f(i) = max{ s | (1 <= s < i) and (p[1 .. s-1] = p[i-s+1 .. i-1]) }

`}

Both the definition of A[i] and f(i) are almost same. But in the case of A[i], we need to satisfy the condition of p[s] p[i] which is not needed in the case of f(i). So, in case of the prefix array, the pattern matching of p is on pattern itself. We’ll use this function f(i) in next section.

3.2 Prefix array

In this section, we’ll write algorithm for computing prefix array that is A[i].

{`

1. t=0; A[1]:=0;

2. for i=2 to m do begin

3. while (t>0) and (p[i-1]<>p[t]) //t=f(i-1)

4. do t=A[t];

5. t:=t+1;

6. if p[i]<>p[t] //t=f(i)

7. then A[i]:=t else A[i]:=A[t]

8. end

`}

Explanation of the above algorithm:

We are proceeding from left to right. In algorithm, we mentioned that t=f(i-1) in line 3. Then, in that case, we can extend the matched portion by 1 (line 5).

If p[t] p[i-1], we slide the pattern over itself until we find p[t]=p[i-1] and we can then extend the matching portion.

In line 7, the condition satisfied, so A[i] is set to t.

4. Example illustrating KMP algorithm

{`Pattern p = a b a b b

i = 2, at line 7 t=1,

p[1]<>p[2],

f(2) = 1, A[2]=1

i

a b a b b

a b a b b

t

i = 3, t=1 at line 4,

p[1]<>p[2],

t=A[1]=0, at line 7, t=1, f(3)=1, p[1]=p[3], A[3]=0

a b a b b

a b a b b

i = 4 t=1 at line 4. p[3]=p[1], t:=t+1, t=2.

At line 7, p[4]=p[2], A[4]:=A[2]=1

i = 5 t=2, f(4)=2. At line 4, p[4]=p[2], t=t+1=3. f(5)=3.

At line 7, p[5]<>p[3],

A[5]=t=3

Finally we have

i | 1 2 3 4 5

------------------------------

pat | a b a b b

f | 0 1 1 2 3

A | 0 1 0 1 3

`}

5. Analysis of KMP algorithm

Creating prefix array is a pre-processing task and if the length of pattern is m. Then to execute that algorithm, the running time will be O(m), the space complexity will also be O(m) and in second algorithm, the running time will be O(n) where n is the string length in the text.

So, the total time complexity for this algorithm is O(m+n).